Apache Airflow is a data orchestration tool. It is used to build, schedule, and monitor workflows. Born at Airbnb in 2014 and open source since its early days, Airflow has an active community and is a reference in the field of job orchestration.

If you have reached this page, however, it is probably because you have run into Airflow's limitations: more modern platforms are better equipped to handle the same tasks, with a simpler and more complete experience.

This article lists eight open-source alternatives to Airflow: Luigi, Prefect, Dagster, Temporal, Kedro, Windmill, Mage AI, and Kestra.

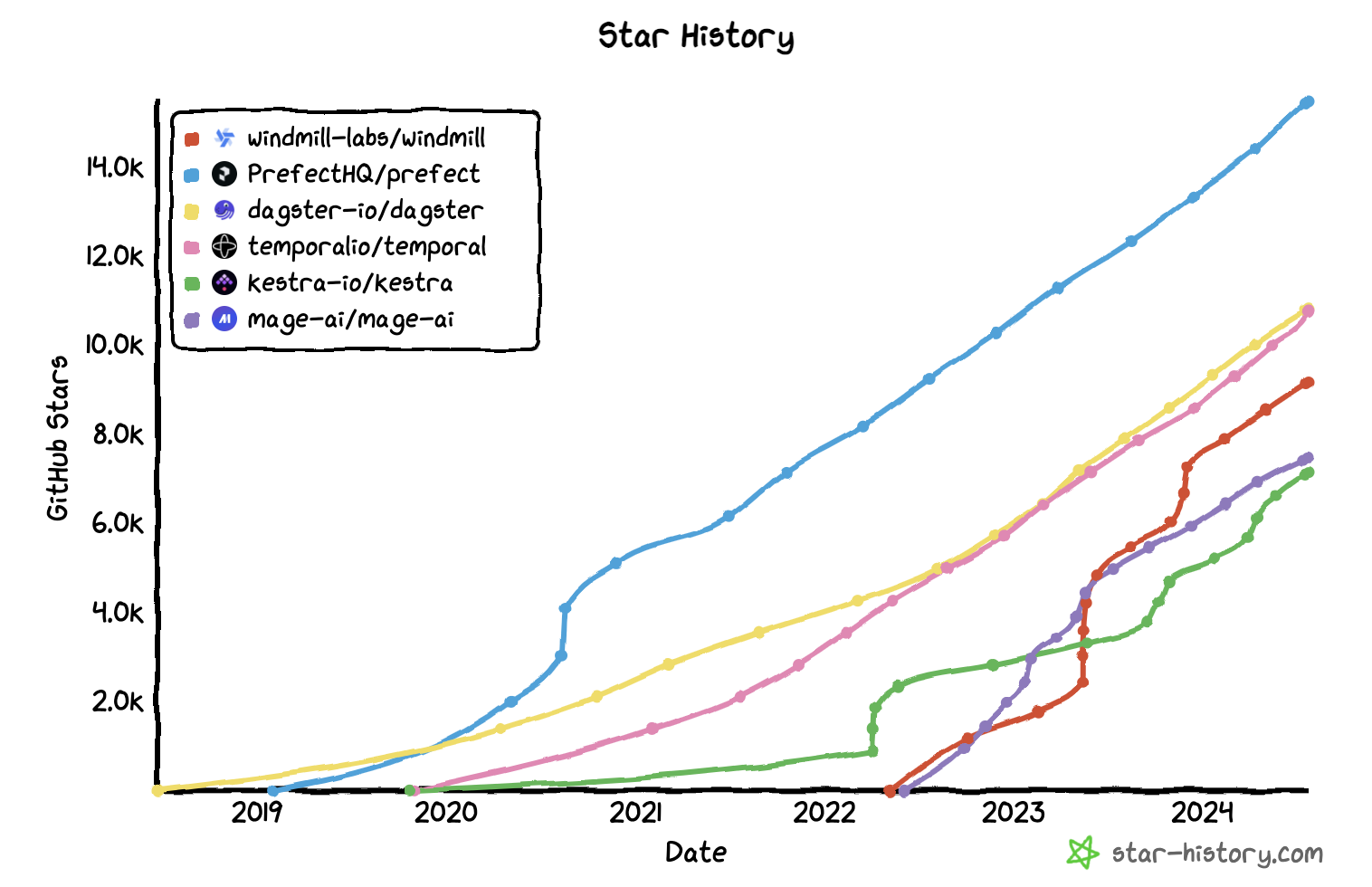

The tools below are ranked by number of GitHub stars, in decreasing order. This ranking method is far from perfect, but a quantitative criterion is a welcome addition to the subjective world of comparisons.

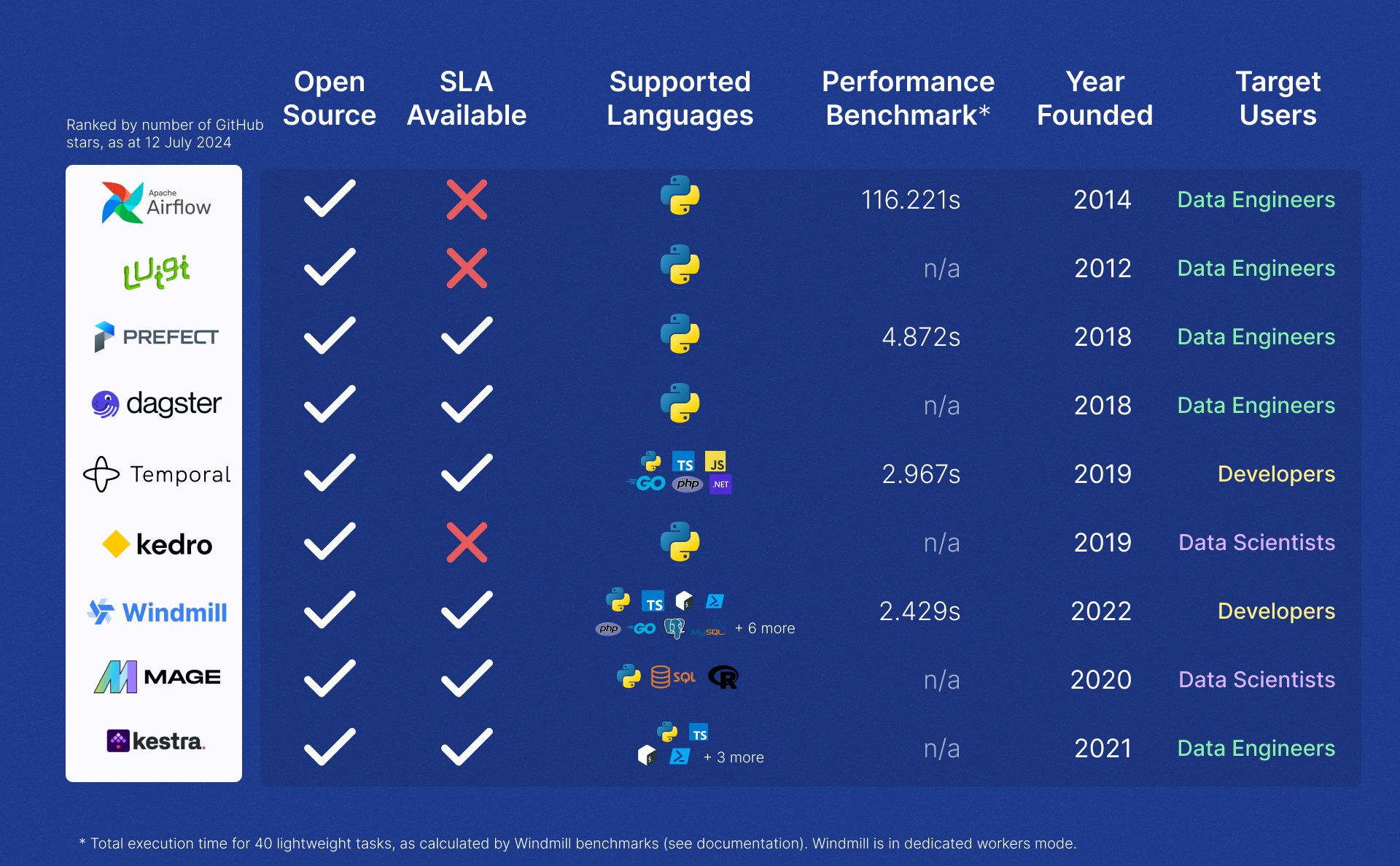

Before we begin, here are the main aspects we will discuss:

- Target users: the audience for which the platform was designed.

- Ecosystem: nonprofit project or platform monetized by a private company.

- Use cases: typical cases for which the platform is a good fit.

- Performance: results from a benchmark we ran at Windmill a few months ago on some of these tools.

The rest of the criteria will come from our testing of each platform and user reviews.

Repos and stars:


Star Wars, June 21 2018 - July 12 Edition

Airflow

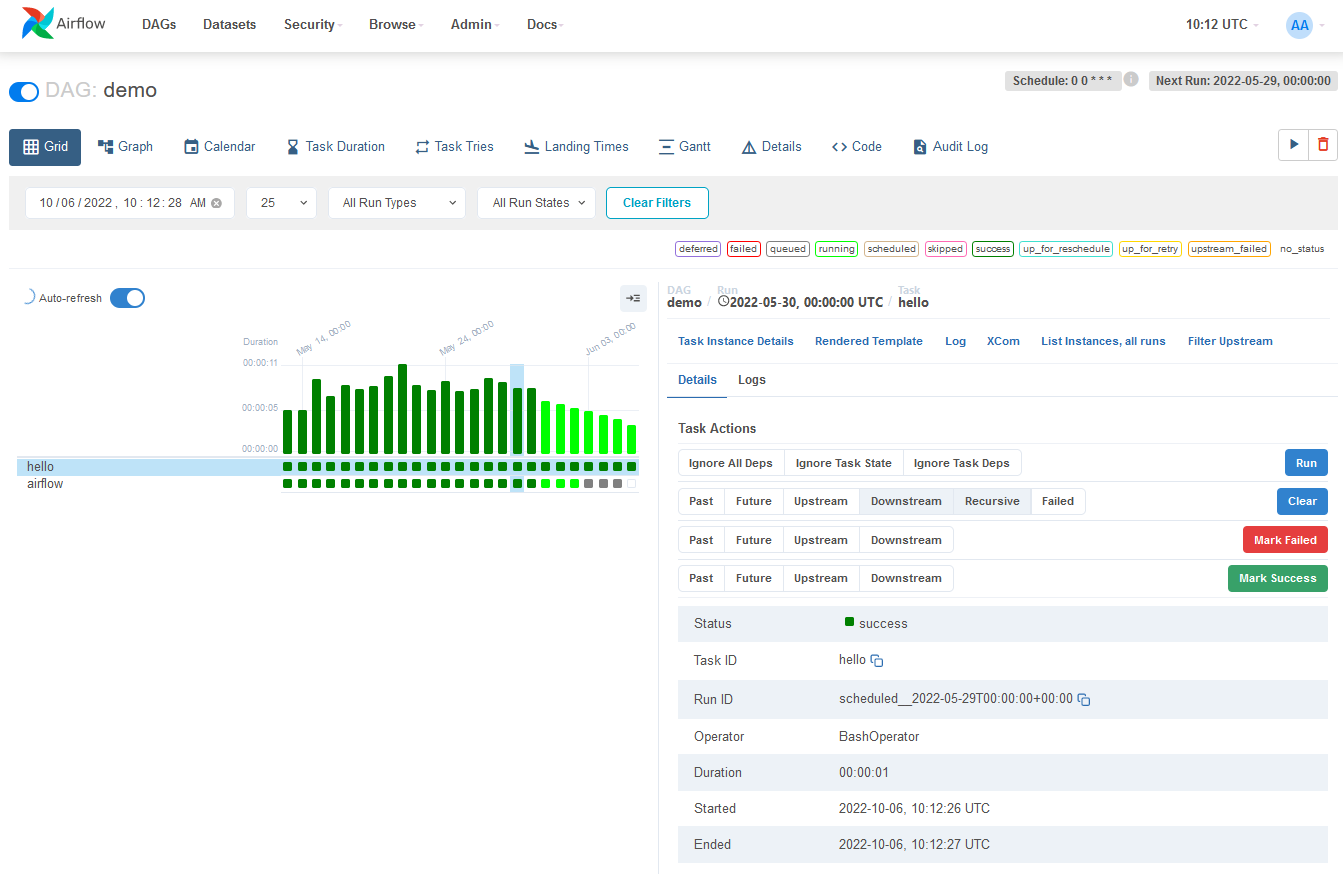

Apache Airflow is a data orchestration tool commonly used to automate, schedule, and monitor complex workflows. It structures tasks as directed acyclic graphs (DAGs), so that dependencies are respected and each task runs only after the tasks it depends on have completed. The tool is designed primarily to manage data pipelines. According to a survey conducted by Airflow, 54% of its users are Data Engineers and 12% are Developers.
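To make the DAG idea concrete, here is a minimal, library-free sketch of what an orchestrator does with one: given each task's upstream dependencies, it runs a task only once all of its upstream tasks are done. It uses Python's standard `graphlib` module (Python 3.9+) rather than Airflow itself, and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform": {"extract_orders", "extract_customers"},
    "load": {"transform"},
}


def run_dag(deps):
    """Run tasks in dependency order and return the order actually used."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    executed = []
    while sorter.is_active():
        # Every task returned here has all its dependencies completed,
        # so a real orchestrator could run this whole batch in parallel.
        ready = sorted(sorter.get_ready())
        for task in ready:
            executed.append(task)  # placeholder for actually running the task
            sorter.done(task)
    return executed


order = run_dag(dependencies)
print(order)
```

In Airflow itself, the same graph would be declared with operators (or the TaskFlow `@task` decorator) and the `>>` dependency syntax; the sketch only illustrates the ordering rule that the DAG enforces.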

Airflow is widely used across industries (forked 13.8k times at the time of writing), thanks to its broad ecosystem of documentation and tutorials. This is where Airflow's strength lies: the status quo. It is easier to find engineers who already have experience with it, or users who have run into the same problem as you.

However, Airflow is a complex tool: running it in production requires a minimum of DevOps and systems engineering knowledge. Its Python-first approach limits its versatility, and its user experience can make you feel like you are using a tool made in 2005, which complicates managing workflow versions, permissions, and security. For example, XComs, which Airflow uses to share data between tasks, are inconvenient and tricky to work with.
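For readers who have not used XComs: a task pushes a small value under its task ID and a key, and a downstream task pulls it by naming that exact task ID and key (in Airflow, via `ti.xcom_push(key=..., value=...)` and `ti.xcom_pull(task_ids=..., key=...)`, with a task's return value pushed under the key `return_value`). The toy in-memory store below only mimics that addressing scheme to show why it can feel indirect; it is not Airflow's implementation, which by default persists XComs in the metadata database, and the class and task names are hypothetical.

```python
class ToyXComStore:
    """Hypothetical in-memory stand-in for the table where Airflow stores XComs."""

    def __init__(self):
        # Values are addressed by (task_id, key), as XComs are within one DAG run.
        self._values = {}

    def push(self, task_id, key, value):
        self._values[(task_id, key)] = value

    def pull(self, task_id, key="return_value"):
        # The consumer must know the producer's exact task ID and key;
        # in this toy, a typo returns None rather than raising an error.
        return self._values.get((task_id, key))


xcom = ToyXComStore()


def extract():
    rows = [3, 5, 8]
    # Airflow pushes a task's return value under "return_value" automatically;
    # the toy does it by hand.
    xcom.push("extract", "return_value", rows)


def transform():
    rows = xcom.pull("extract")
    xcom.push("transform", "return_value", sum(rows))


extract()
transform()
print(xcom.pull("transform"))  # 16
print(xcom.pull("extrakt"))    # None
```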

In terms of performance, Airflow is by far the slowest tool we have benchmarked, taking 56 seconds to complete 40 lightweight tasks.

Finally, Airflow is a community-driven project, with the usual advantages and disadvantages: you get the same community support as everyone else, and no prioritization of your issues.

Airflow is a great tool, but with the advent of more modern solutions (see below), the main reasons to keep using it are legacy and sunk costs.

Alternatives

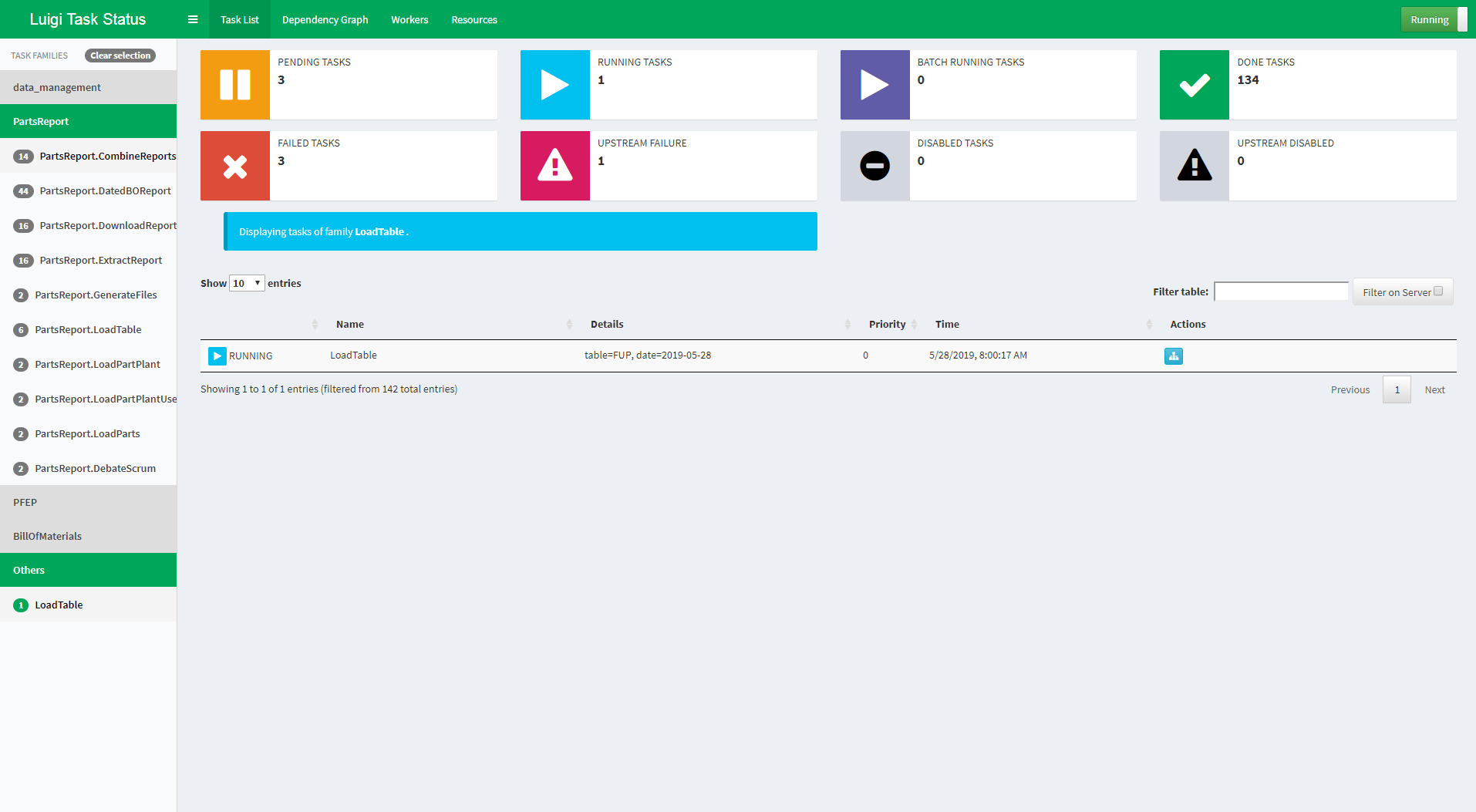

Luigi

Luigi is very similar to Airflow. Developed at Spotify and open sourced in 2012, it is a Python module that helps build complex pipelines of batch jobs. It handles dependency resolution, workflow management, and visualization of your data workflows, which it models as DAGs.

Luigi enables you to create workflows, define tasks, and manage their dependencies, then execute the tasks via a central scheduler before monitoring your ETLs through a web-based interface.
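Luigi's core model is that each task declares what it requires and what it outputs, and a task counts as complete when its output already exists, so re-running a pipeline skips finished work. The stdlib-only sketch below imitates that `requires`/`output`/`run` pattern, with a hypothetical in-memory set of output names standing in for files; real Luigi tasks subclass `luigi.Task` and return targets such as `luigi.LocalTarget`, and the `build` function here is a simplified stand-in for Luigi's scheduler.

```python
# Hypothetical stand-in for the file system: names of outputs that exist.
existing_outputs = set()
run_log = []


class Task:
    def requires(self):
        return []

    def output(self):
        raise NotImplementedError

    def run(self):
        raise NotImplementedError

    def complete(self):
        # Luigi-style rule: a task is done if its output already exists.
        return self.output() in existing_outputs


class Download(Task):
    def output(self):
        return "raw.csv"

    def run(self):
        run_log.append("Download")
        existing_outputs.add(self.output())


class Aggregate(Task):
    def requires(self):
        return [Download()]

    def output(self):
        return "report.csv"

    def run(self):
        run_log.append("Aggregate")
        existing_outputs.add(self.output())


def build(task):
    """Depth-first: build requirements first, skip anything already complete."""
    if task.complete():
        return
    for dep in task.requires():
        build(dep)
    task.run()


build(Aggregate())
build(Aggregate())  # second call does nothing: the outputs already exist
print(run_log)  # ['Download', 'Aggregate']
```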

We won't dwell on it: with similar strengths, Luigi has more weaknesses than Airflow, namely a less active community, a narrower ecosystem, a more cumbersome UX, and performance issues.

Prefect

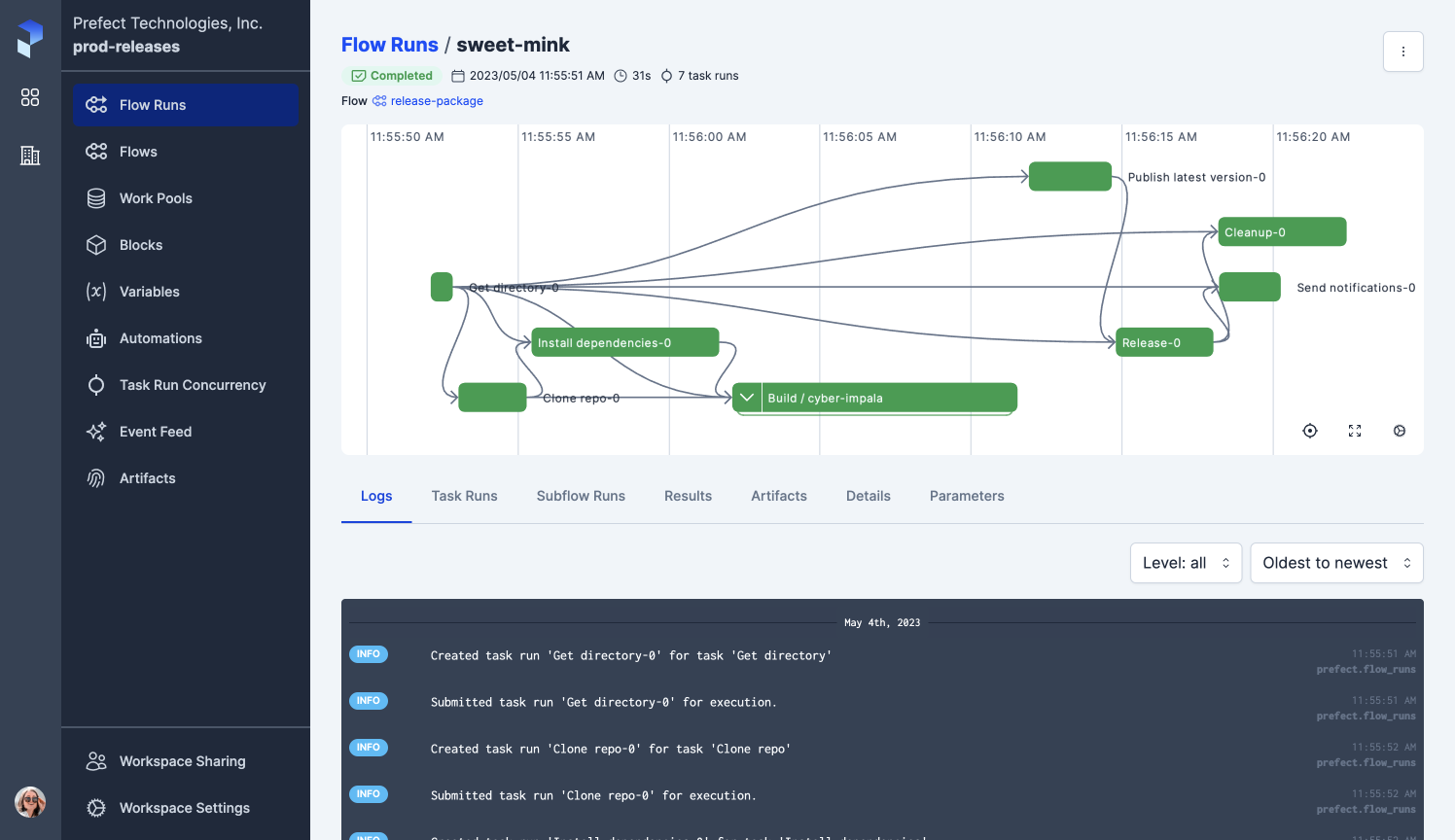

Prefect is a typical example of what a modern alternative to Airflow (and Luigi) looks like. Prefect is a workflow orchestration framework for building data pipelines. With a Python-first approach, Prefect is geared towards Data Engineers.

The main advantage of Prefect over Airflow is how intuitive the platform is: Prefect is devilishly simple, and onboarding is quick. Beyond that, you can expect the same features as Airflow (cron-based scheduling, retries, etc.).
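Retries are a good example of a feature shared by the orchestrators in this list. In Prefect they are configured on the task decorator (for example `@task(retries=3, retry_delay_seconds=...)`); the plain-Python sketch below shows the underlying behavior with a hypothetical `with_retries` decorator and a deliberately flaky task. It is not Prefect's implementation.

```python
import functools
import time


def with_retries(retries, delay_seconds=0.0):
    """Hypothetical decorator: re-run the function up to `retries` extra times."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            for attempt in range(retries + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception:
                    if attempt == retries:
                        raise  # out of retries: surface the last error
                    time.sleep(delay_seconds)
        return wrapper
    return decorator


calls = {"count": 0}


@with_retries(retries=3)
def flaky_fetch():
    # Simulated transient failure: fails twice, then succeeds.
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary network error")
    return "payload"


result = flaky_fetch()
print(result, calls["count"])  # payload 3
```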

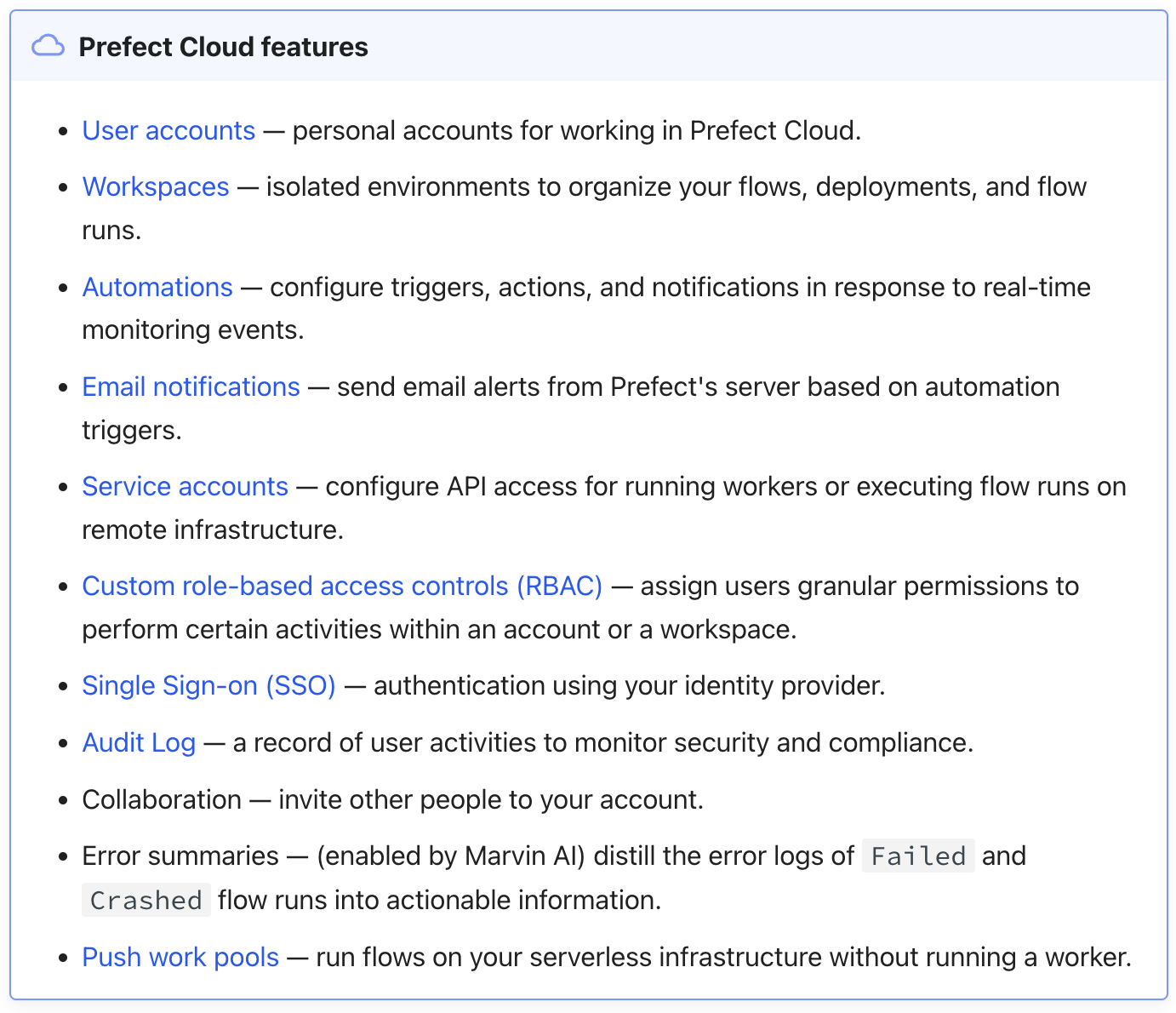

Prefect also goes further than Airflow in monitoring your tasks, but note that this monitoring is done through a cloud platform that requires a subscription: many of the features needed for Prefect to work properly are only available in Prefect Cloud.

In terms of performance, Prefect is the second slowest of the job orchestrators we benchmarked (alongside Airflow, Temporal, and Windmill), but it is much faster than Airflow and closer to the best in class: it took 4.872 seconds to complete 40 lightweight tasks, compared to 56 seconds for Airflow and 2.429 seconds for Windmill's dedicated worker mode.
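Dividing the reported totals by the 40 tasks gives a rough average cost per task, which makes the gap easier to read: about 1.4 s per task for Airflow, versus roughly 0.12 s for Prefect and 0.06 s for Windmill's dedicated worker. This is only arithmetic on the figures quoted in this article, not additional benchmark data.

```python
# Totals quoted in this article for 40 lightweight tasks, in seconds.
totals = {"Airflow": 56.0, "Prefect": 4.872, "Windmill (dedicated worker)": 2.429}
TASKS = 40

# Average wall-clock time per task for each engine.
per_task = {name: total / TASKS for name, total in totals.items()}
for name, seconds in per_task.items():
    print(f"{name}: {seconds * 1000:.1f} ms per task")

# How many times slower Airflow is than each of the other two.
for name in ("Prefect", "Windmill (dedicated worker)"):
    print(f"Airflow vs {name}: {totals['Airflow'] / totals[name]:.0f}x slower")
```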

To learn more about Prefect, you can check out our comparison between Prefect and Windmill.

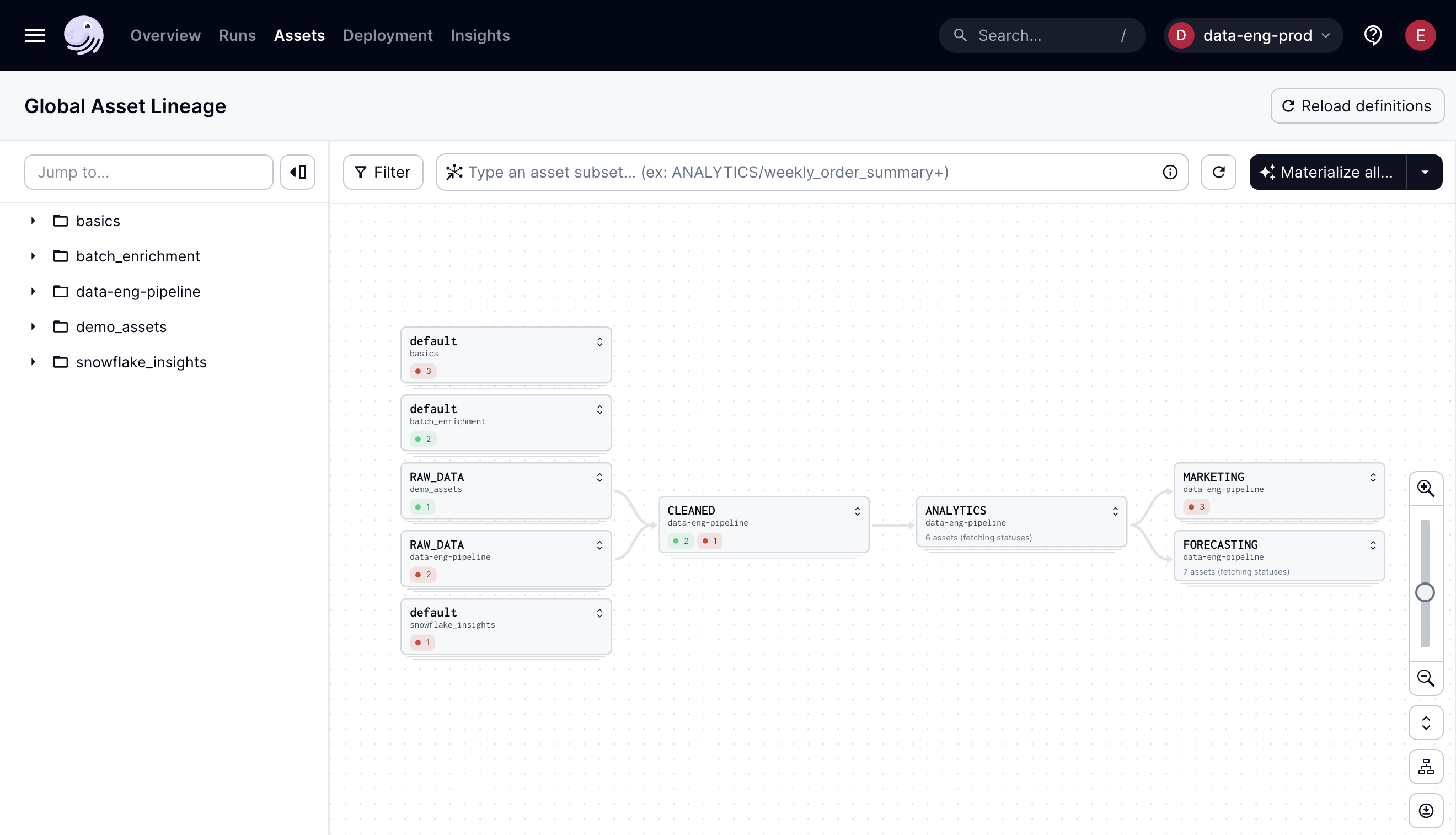

Dagster

Dagster is an open-source data orchestrator designed for building, running, and observing data pipelines. Unlike Airflow, which primarily focuses on scheduling and executing predefined workflows, Dagster introduces a stronger emphasis on development workflows, testing, and maintenance of data pipelines. This is achieved through a type system that validates data as it moves between tasks, improving reliability and error handling.

Dagster also distinguishes itself from Airflow with a declarative, asset-based approach to orchestration that focuses on outputs such as tables and models rather than just tasks.
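The asset-based idea can be sketched without Dagster: each function declares the asset it produces, its upstream assets are inferred from its parameter names, and the produced value is checked against the declared return type before downstream assets consume it. Dagster's own `@asset` decorator also infers dependencies from parameter names, but the `asset` decorator, the `ASSETS` registry, and the `materialize_all` function below are hypothetical stand-ins written with the standard library only.

```python
import inspect
from graphlib import TopologicalSorter

ASSETS = {}  # asset name -> function that computes it


def asset(fn):
    """Hypothetical decorator: register fn as an asset named after the function."""
    ASSETS[fn.__name__] = fn
    return fn


@asset
def raw_orders() -> list:
    return [{"id": 1, "amount": 30.0}, {"id": 2, "amount": 12.5}]


@asset
def revenue(raw_orders: list) -> float:
    # Upstream dependency inferred from the parameter name "raw_orders".
    return sum(order["amount"] for order in raw_orders)


def materialize_all():
    # Build the dependency graph from each asset's parameter names.
    graph = {name: set(inspect.signature(fn).parameters) for name, fn in ASSETS.items()}
    values = {}
    for name in TopologicalSorter(graph).static_order():
        fn = ASSETS[name]
        sig = inspect.signature(fn)
        value = fn(**{dep: values[dep] for dep in sig.parameters})
        expected = sig.return_annotation
        # Validate the output before any downstream asset consumes it.
        if expected is not inspect.Signature.empty and not isinstance(value, expected):
            raise TypeError(f"{name} returned {type(value).__name__}, expected {expected.__name__}")
        values[name] = value
    return values


print(materialize_all()["revenue"])  # 42.5
```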

For developers, Dagster offers a smooth experience, facilitating easy transitions between different environments and managing data assets more effectively. Additionally, Dagster is optimized for cloud and container environments, enabling more efficient dependency isolation and infrastructure management compared to Airflow's often cumbersome setup.

If we were to compare Dagster with Prefect, perhaps Dagster is a bit more focused on Data Engineers, while Prefect is also suitable for developers. Lastly, we have read several times that the community finds Prefect's documentation to be lagging behind that of Dagster. If you want to compare Dagster to Prefect, you can check out this article from Dagster, or this one from Prefect.

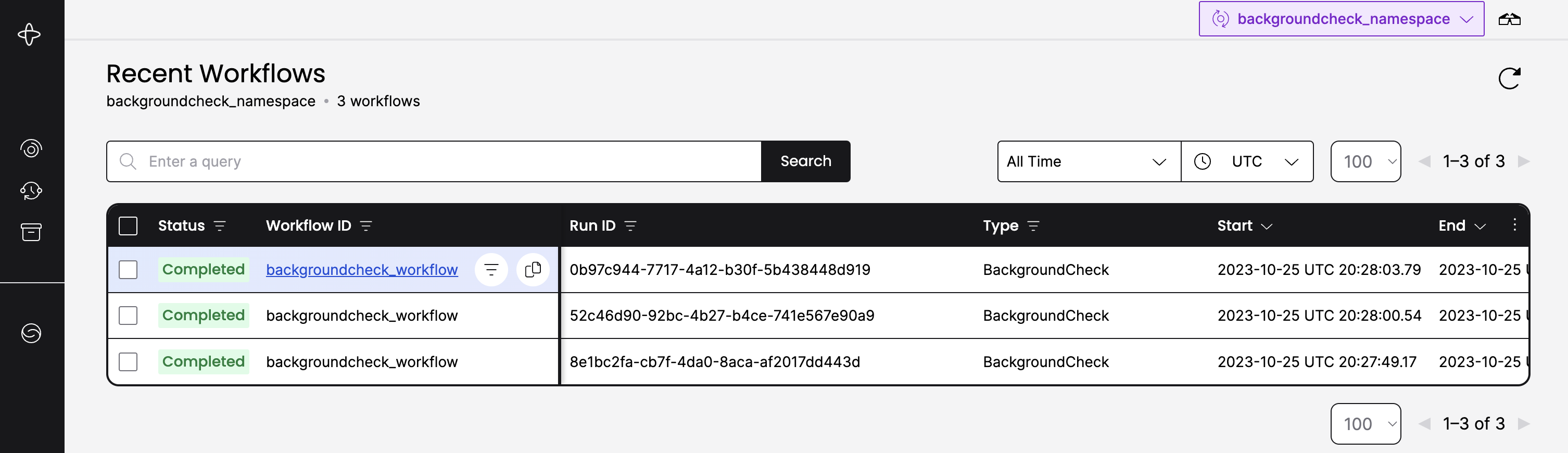

Temporal

With Temporal, you enter the realm of developers and leave the domain strictly reserved for data engineering. Temporal is a runtime that aims to ensure the durable execution of your application code, notably through fault tolerance (Temporal promises to "reduce failure by 10-100x").
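Durable execution is easier to grasp with a toy model. Temporal records the result of each completed Activity in the Workflow's event history; if a Worker crashes, the Workflow code is re-run from the start, and completed Activities are answered from that history instead of being executed again. The sketch below imitates that replay behavior with a plain list as the history and a simulated crash. It is not the Temporal SDK, and all names in it are hypothetical.

```python
class Crash(Exception):
    """Simulated worker crash."""


class ToyWorkflowRun:
    def __init__(self):
        self.history = []     # persisted results of completed activities, in order
        self.executions = []  # activities that actually ran (real side effects)

    def execute_activity(self, step, fn, *args):
        # Real Temporal matches commands by their order in deterministic
        # workflow code; here the caller passes the step index explicitly.
        if step < len(self.history):
            return self.history[step]  # replay: completed before the crash
        result = fn(*args)
        self.executions.append(fn.__name__)
        self.history.append(result)  # record durably before moving on
        return result


def charge_card(amount):
    return f"charged {amount}"


def send_receipt(charge):
    return f"receipt for '{charge}'"


def order_workflow(run, crash_after_charge=False):
    charge = run.execute_activity(0, charge_card, 42)
    if crash_after_charge:
        raise Crash()
    return run.execute_activity(1, send_receipt, charge)


run = ToyWorkflowRun()
try:
    order_workflow(run, crash_after_charge=True)
except Crash:
    pass  # the worker died, but the history survives

result = order_workflow(run)  # re-run from the start, replaying step 0
print(result)          # receipt for 'charged 42'
print(run.executions)  # ['charge_card', 'send_receipt']: the card was charged once
```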

Compared to Airflow and the tools mentioned above, Temporal's major differentiation is that it targets developers. This is primarily reflected in:

- Polyglot: you are no longer limited to Python (Temporal supports Go, Java, Python, TypeScript, PHP, and .NET).

- Versatility: Temporal can be used for data pipelines or day-to-day operations, although you may sometimes need to code tool-specific pieces (data connectors, notifications, etc.) that Dagster or Prefect would have provided.

- Simplicity: a single tool, instead of a multiplication of specialized tools, each with its own setup and onboarding.

Managing deployments is significantly easier for Temporal Workflows than for Airflow DAGs. In Temporal, Workflows and Activities are packaged within Workers, and you can run several of them, which gives greater flexibility and isolation between projects. In contrast, Airflow supports only one type of worker: if multiple projects share the same Airflow deployment, they cannot have conflicting dependencies without causing issues.

Temporal is also notable for being backed by a for-profit company (unlike Airflow or Luigi), and a large one (compared to Prefect, Dagster, or Windmill), with over 200 employees.

In terms of performance, Temporal performs very well according to benchmarks conducted by Windmill. Temporal ranks as the second fastest workflow engine, behind Windmill's dedicated worker mode.

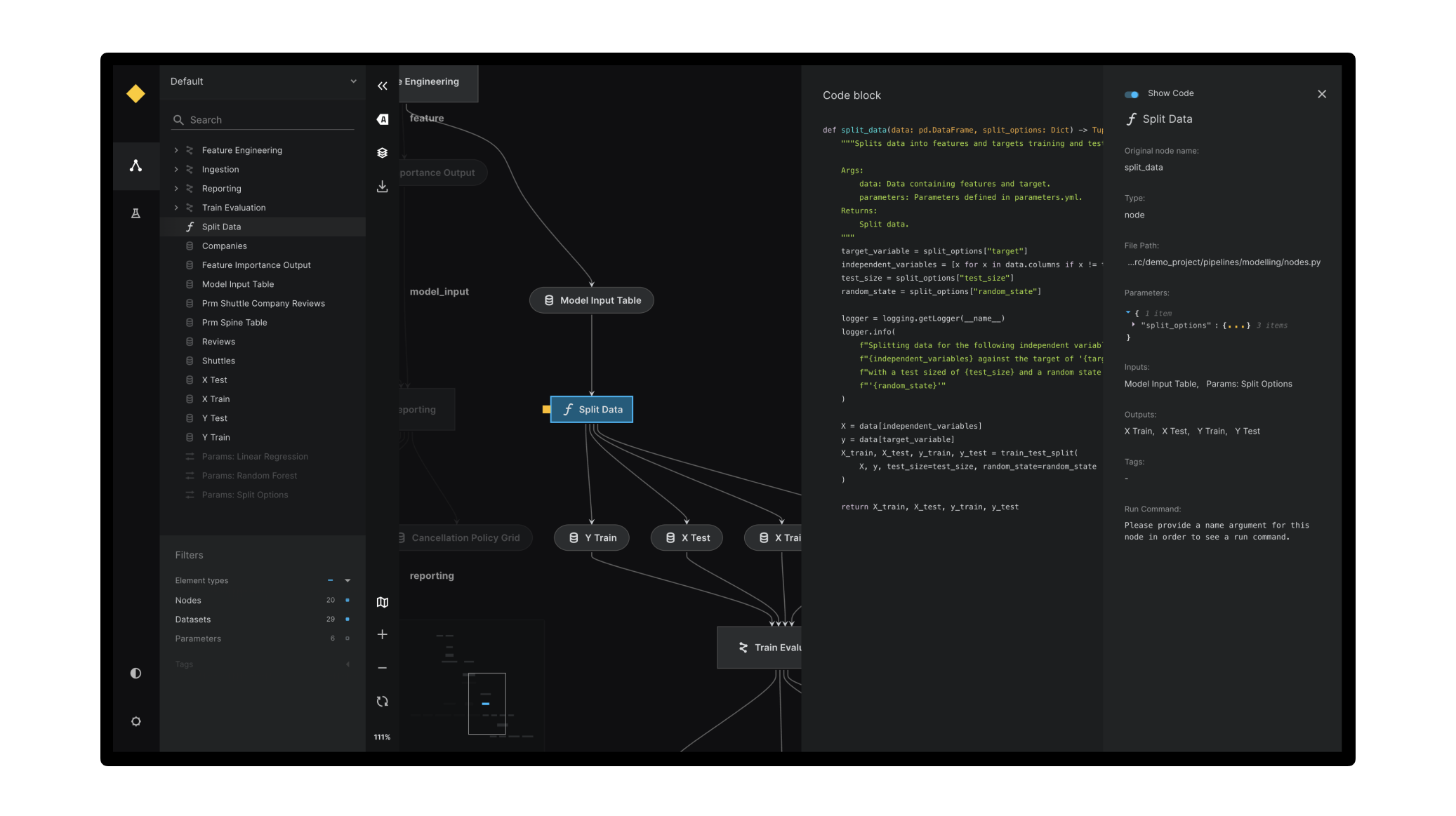

Kedro

Kedro is another tool open sourced by a large company (McKinsey's QuantumBlack, in 2019), and it is now maintained as a non-profit project.

Unlike Airflow and the previously mentioned tools, Kedro targets a different audience: data scientists, specifically for creating machine learning workflows.

Kedro facilitates data versioning, supports incremental computations, and automatically resolves your pipeline's execution order. Used alongside Kedro-Viz, it offers a comprehensive view of your evolving data and machine-learning workflows: it provides clear data lineage, tracks machine-learning experiments, and simplifies communication with ops teams by visually mapping out the processes. In short, Kedro's promise is usable data science code with as little time as possible spent on infrastructure.
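Kedro's automatic ordering comes from the fact that each node names the datasets it reads and writes: the pipeline is ordered by matching those names, so nodes can be listed in any order. In Kedro this is written with `node(func, inputs=..., outputs=...)` inside `pipeline([...])`; the sketch below reproduces only the ordering rule, using a hypothetical `Node` dataclass and the standard library.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter


@dataclass(frozen=True)
class Node:
    name: str
    inputs: tuple
    outputs: tuple


# Declared out of order on purpose: the order is derived from dataset names.
nodes = [
    Node("train_model", inputs=("features",), outputs=("model",)),
    Node("clean", inputs=("raw_data",), outputs=("clean_data",)),
    Node("featurize", inputs=("clean_data",), outputs=("features",)),
]


def resolve_order(nodes):
    # Map each dataset to the node that produces it.
    producer = {out: n.name for n in nodes for out in n.outputs}
    graph = {
        # External inputs such as "raw_data" have no producer node.
        n.name: {producer[i] for i in n.inputs if i in producer}
        for n in nodes
    }
    return list(TopologicalSorter(graph).static_order())


print(resolve_order(nodes))  # ['clean', 'featurize', 'train_model']
```

Unlike the explicit task-to-task edges of an Airflow DAG, the edges here are never written by hand: they follow from which node produces which dataset.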

Asked if it's an orchestrator, Kedro replies:

"Orchestrators like Airflow, Luigi, Prefect, Dagster, Flyte, and Kubeflow focus on running, scheduling, and monitoring pipelines. Meanwhile, Kedro is all about the process of authoring pipelines. So, if you're looking for a solution that takes care of questions like "What time will this task run?" or "How do I manage my compute?", Kedro might not be what you need."

[...]

Kedro provides a set of deployment guidelines for using orchestrators as deployment targets. If you're looking for a partner to help you write production-ready data science code, Kedro is the perfect solution for you."

Windmill

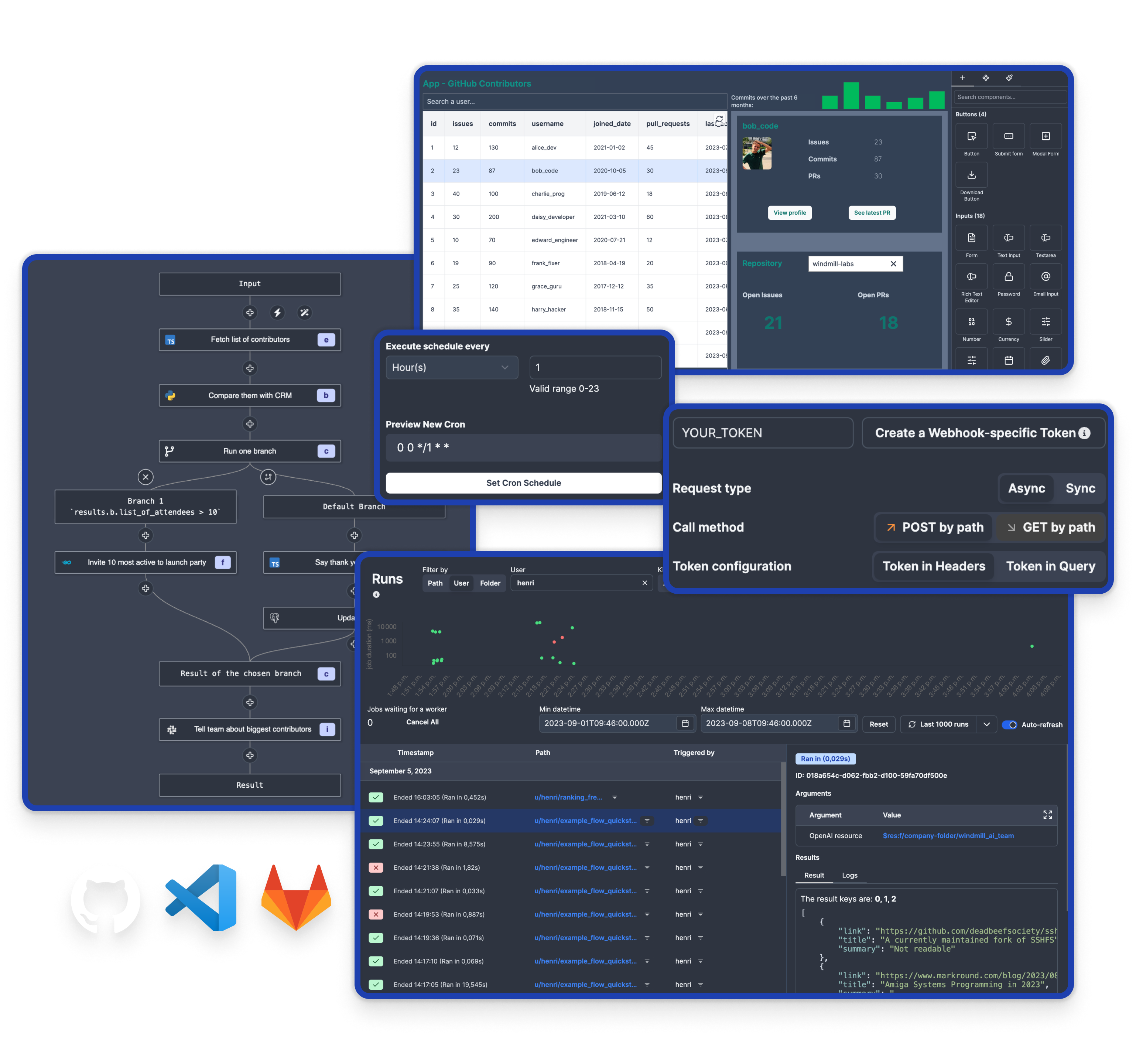

Windmill is an open-source workflow engine and developer platform designed to build internal tools, including endpoints, workflows, and UIs. It supports coding in multiple languages such as TypeScript, Python, Go, Bash, SQL, or any Docker image, alongside low-code builders.

Windmill was designed by developers for developers, from semi-technical users (low-code builders) to senior/staff software engineers who expect production-grade tooling that stays flexible and customizable with code. Windmill was built to turn high-value code containing business logic, data transformations, and internal API calls into scalable microservices and tools, without the usual heavy lifting.

In addition, Python support as a primary language and a workspace integrated with object storage (in particular, S3) make Windmill an excellent fit for data engineers, particularly for building data pipelines.

Windmill has three editors (or products) that work independently but are fully compatible with each other:

- The Script Editor is an integrated development environment that allows you to write code in various languages like TypeScript, Python, Go, Bash, SQL, or even run any Docker container through Windmill's Bash support.

- The Flow Editor is a low-code builder that enables you to create workflows represented as directed acyclic graphs (DAGs), orchestrating the execution of steps across different workers while respecting dependency constraints.

- The App Editor is a tool for creating customized user interfaces with a drag-and-drop editor, allowing you to build data-centric dashboards.

These editors can be mixed together in Windmill, as scripts created in the Script Editor can be used as steps in flows built with the Flow Editor, and both scripts and flows can be integrated into apps created with the App Editor, providing a comprehensive platform for building internal tools, endpoints, and workflows.
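A Windmill Python script is, by convention, a file exposing a `main` function: Windmill derives the script's inputs from `main`'s parameters, and its return value is the result that later flow steps can consume. The sketch below shows two such scripts chained the way a linear flow would chain them. The function names and the `run_flow` helper are hypothetical, and in Windmill the chaining is configured in the Flow Editor rather than written by hand.

```python
from typing import Callable


# In Windmill each step would be a separate script whose entry point is `main`;
# they are renamed here only so both fit in one file.
def fetch_orders_main(min_amount: float = 0.0) -> list:
    orders = [{"id": 1, "amount": 30.0}, {"id": 2, "amount": 12.5}, {"id": 3, "amount": 5.0}]
    return [o for o in orders if o["amount"] >= min_amount]


def summarize_main(orders: list) -> dict:
    return {"count": len(orders), "total": sum(o["amount"] for o in orders)}


def run_flow(steps: list[Callable], first_input: dict):
    """Hypothetical linear flow: each step receives the previous step's result."""
    result = steps[0](**first_input)
    for step in steps[1:]:
        result = step(result)
    return result


print(run_flow([fetch_orders_main, summarize_main], {"min_amount": 10.0}))
# {'count': 2, 'total': 42.5}
```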


Compared to Airflow and the solutions mentioned here, Windmill stands out in the following ways:

- Comprehensiveness: Windmill supports multiple languages and serves as an all-in-one platform for iteration, triggers (job scheduling, webhooks etc.), and deployments.

- Developer Experience: Windmill offers features like local development, testing flows step by step, or running full flows in the web UI.

- Enterprise-ready: It includes secret management, permission sharing, transitions between multiple environments, and horizontal scalability.

- Performance: Windmill is the fastest workflow engine for both short and rapid tasks. See this benchmark for the methodology.

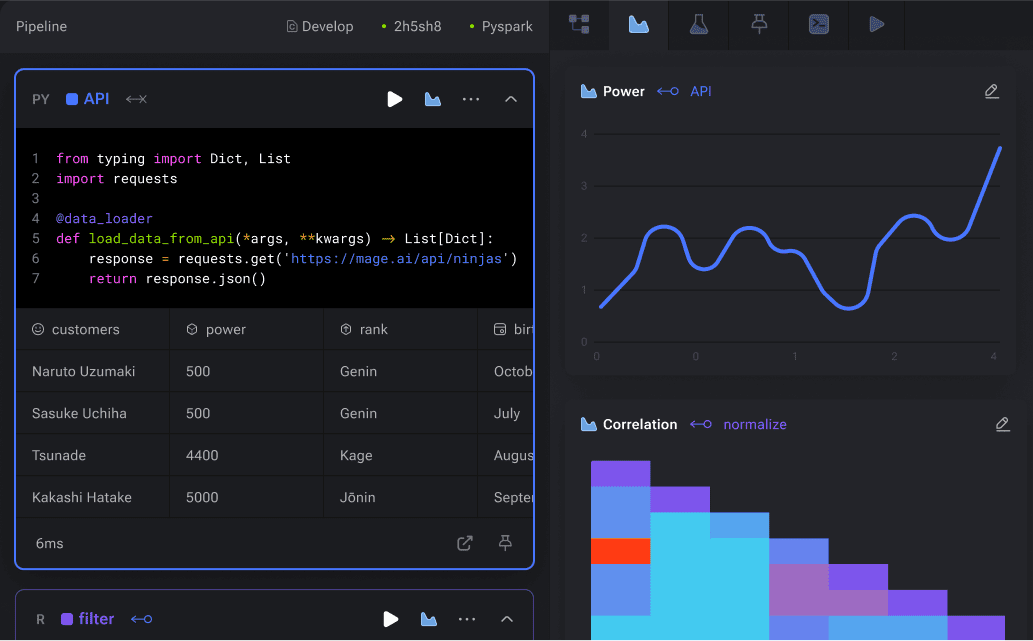

Mage AI

Mage is a newcomer, founded in 2020 by former Airbnb engineers (what a coincidence). Mage makes the same promise as Prefect or Dagster: to be a modern solution that replaces Airflow (its landing page alone mentions Airflow 5 times).

Mage focuses on simplicity with a pretty and intuitive UI, and on speed with real-time processing.

Mage is a good fit for data scientists and data engineers who prioritize ease of use and want to focus on managing their data pipelines.

Kestra

Finally, take a look at Kestra, the youngest of these Airflow alternatives. Founded in 2021, Kestra is an event-driven orchestration platform for managing business-critical workflows declaratively in code: flows are written in a declarative YAML syntax, and all operations are driven through its API.

For more details on the comparison with Airflow, check out this article published by Kestra.

Conclusion

Let's not beat around the bush: there are more modern and more efficient tools than Airflow. The only reasons to stick with Airflow are legacy, or a form of purism (its more efficient alternatives are maintained by private companies, although they are all open source).

To find the right tool, it's important to identify your needs: the skills available internally (including the languages your team masters), performance at scale, whether you want to do only data processing or also build a stack of internal tools around it, whether you are ready to handle onboarding onto a complex product, and whether you might need support (and a provider accountable to you if you get stuck).

The good thing is that all these alternatives are open source and easily self-hostable, so you can make up your own mind for free, and without receiving emails from overly wilful salespeople.

Finally, your internal infrastructure constraints will be an important criterion. For example, Prefect, Dagster, and Kestra can be used for the same purposes, but they rely on different infrastructures. I suggest you look at each tool's documentation to find out more.

Please note that one of the solutions shown (Windmill) presented you with this article, with all that that implies in terms of subjectivity. Please contact us if you find any inaccuracies or would like to contribute. Feel free to make a PR on windmilldocs (open source to the end).

You can self-host Windmill with a single `docker compose up`, or go with the cloud app.
